Nominal Voltage vs Rated Voltage – Key Differences Explained Simply

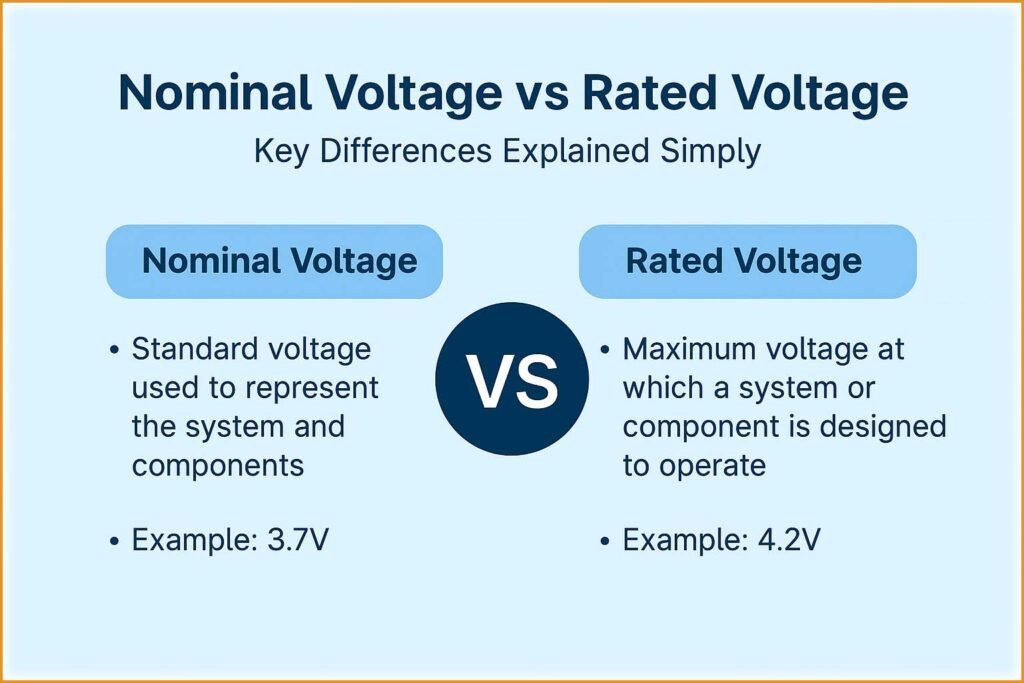

Electrical systems depend on voltage levels to operate safely and efficiently. However, when you read equipment specifications, you often see two terms: nominal voltage and rated voltage. At first glance, they may seem the same, but they serve very different purposes in design, manufacturing, and operation. Understanding the difference between nominal and rated voltage is essential for engineers, electricians, and even homeowners dealing with electrical installations.

Both terms describe voltage values, but in different contexts. Nominal voltage is a general reference or standard value used to identify a system, while rated voltage is the value assigned by the manufacturer at which equipment is designed to operate safely under normal conditions. Confusing the two can lead to design errors, inefficiency, or even equipment failure.

In this article, we will explain the meaning, difference, and importance of nominal voltage vs rated voltage, along with examples and comparison tables to make it easier to understand.

Key Takeaways

- Nominal voltage is the general or standard voltage used to define a system (e.g., 230V, 400V).

- Rated voltage is the voltage, assigned by the manufacturer, at which a device or component is designed to operate safely and efficiently.

- Nominal voltage is for identification; rated voltage is for performance and safety.

- The rated voltage of equipment must be compatible with the nominal voltage, and the expected variation around it, of the system it connects to.

- Understanding both helps in selecting correct electrical equipment and maintaining long-term reliability.

Understanding Nominal Voltage

Nominal voltage refers to the approximate or designated voltage value assigned to a power system or electrical network. It doesn’t represent the exact operating voltage but serves as a reference point.

For example, when we say that a domestic power supply is 230V, that’s the nominal voltage of the system. In reality, the voltage may fluctuate between 220V and 240V depending on the load and supply conditions.

Nominal voltages are set by standards such as IEC (International Electrotechnical Commission) or IEEE, which ensure that electrical systems around the world follow consistent naming conventions. These standard values let equipment built for a given nominal voltage work on any system that uses it, despite minor regional variations.

For example, in the United States, nominal voltages are 120V, 240V, and 480V for common systems, while in Europe and many parts of Asia, 230V and 400V are standard nominal voltages.

Understanding Rated Voltage

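Rated voltage, on the other hand, refers to the voltage at which electrical equipment, such as transformers, motors, or circuit breakers, is designed to operate continuously without exceeding its design limits. For some equipment, such as switchgear and capacitors, the rated voltage is effectively an upper limit; for others, such as motors, it is the design point of a small permitted tolerance band. It is determined by the manufacturer through design and testing and is usually printed on the device nameplate.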

For example, a motor rated at 415V is designed to run at that voltage, and IEC 60034-1 allows continuous operation within ±5% of the rating (zone A). Operating it well above that level can cause overheating, insulation damage, or even failure. Similarly, a capacitor rated at 440V can work continuously up to that voltage without degradation of its dielectric material.

The rated voltage ensures that the device performs optimally and has a long service life. It accounts for insulation strength, temperature rise, and dielectric safety margins.

Nominal Voltage vs Rated Voltage – Comparison Table

| Parameter | Nominal Voltage | Rated Voltage |
|---|---|---|
| Definition | Standard or reference voltage for a system | Voltage assigned by the manufacturer for safe, continuous operation of a device |
| Purpose | Used for identifying and classifying electrical systems | Used to ensure equipment operates within safe limits |
| Example | 230V (household system) | 220V (fan motor), 415V (industrial motor) |
| Determined By | Electrical system standards (IEC, IEEE) | Manufacturer design and testing |
| Voltage Variation | Actual voltage may vary around nominal | Supply must stay within the equipment's permitted range |
| Used For | System identification and compatibility | Equipment performance and protection |
| Tolerance | Typically ±5% to ±10% variation allowed | Set by the equipment standard (e.g., ±5% for motors); for switchgear and capacitors the rating is an upper limit |

How Nominal Voltage and Rated Voltage Work Together

Electrical systems and equipment are designed to complement each other. For instance, a 230V nominal system supplies power to equipment rated for 220V–240V. The rated voltage of the equipment is chosen to match the expected voltage range of the nominal system.

This coordination ensures that even if the supply voltage fluctuates slightly, the equipment remains within its safe operating range. Therefore, nominal voltage acts as the system benchmark, while rated voltage defines the equipment’s operating limits.

For example:

- In a 400V three-phase system, motors may have a rated voltage of 415V and are designed to work safely within normal system voltage variations.

- A 230V nominal lighting circuit can safely power lamps rated for 220V, as the difference falls within permissible tolerance limits.
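The two cases above come down to simple arithmetic: how far the nominal supply sits from the equipment rating, and whether that gap fits inside a tolerance band. The Python sketch below shows the calculation. The function names are hypothetical, and the ±5% band is an assumption chosen for illustration: it matches the zone A voltage limit for motors in IEC 60034-1, but lamps and other devices may follow different limits, so the relevant product standard should be checked.

```python
# Deviation of a supply voltage from an equipment rating, compared with an
# ASSUMED tolerance band (+/-5% by default, used here only for illustration).


def deviation_pct(supply_v: float, rated_v: float) -> float:
    """Percent by which the supply is above (+) or below (-) the rating."""
    return (supply_v - rated_v) / rated_v * 100.0


def within_tolerance(supply_v: float, rated_v: float, tol_pct: float = 5.0) -> bool:
    """True if the supply lies inside rated_v +/- tol_pct percent."""
    return abs(deviation_pct(supply_v, rated_v)) <= tol_pct


if __name__ == "__main__":
    # (description, nominal supply in V, equipment rating in V)
    cases = [
        ("400V system, 415V motor", 400.0, 415.0),
        ("230V circuit, 220V lamp", 230.0, 220.0),
    ]
    for label, supply, rated in cases:
        dev = deviation_pct(supply, rated)
        ok = within_tolerance(supply, rated)
        print(f"{label}: {dev:+.2f}% from rating, within +/-5%: {ok}")
```

Both examples land inside the assumed band: the motor sees about 3.6% below its rating and the lamp about 4.5% above it.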

Example of Nominal Voltage vs Rated Voltage in Real Systems

Let’s consider a distribution transformer. The system it serves might have a nominal voltage of 11kV/415V. However, the transformer itself could have a rated primary voltage of 11kV and rated secondary voltage of 433V.

Here, the nominal voltage (415V) represents the general system voltage, while the rated secondary voltage (433V) allows for the voltage drop that occurs across the transformer and cables under load. This ensures that even when the voltage drops along the supply path, the end user still receives approximately the nominal voltage.

This is a practical design approach that ensures stable and reliable power delivery.
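To put numbers on this example, the Python sketch below computes how much higher the transformer's rated secondary voltage is than the nominal network voltage, and converts both line-to-line values to line-to-neutral. The helper names are hypothetical, and the sketch assumes, as is usual for transformer ratings, that 433V is the no-load secondary voltage, so the margin it reports is what remains available for drops under load.

```python
import math

# Values from the distribution transformer example above (line-to-line, in V).
SYSTEM_NOMINAL_LV = 415.0      # nominal voltage of the low-voltage network
TRANSFORMER_RATED_LV = 433.0   # rated secondary voltage, assumed to be at no load


def headroom_pct(rated_v: float, nominal_v: float) -> float:
    """Percent by which the rated voltage exceeds the nominal system voltage."""
    return (rated_v / nominal_v - 1.0) * 100.0


def line_to_neutral(v_line: float) -> float:
    """Convert a three-phase line-to-line voltage to line-to-neutral."""
    return v_line / math.sqrt(3)


if __name__ == "__main__":
    margin = headroom_pct(TRANSFORMER_RATED_LV, SYSTEM_NOMINAL_LV)
    print(f"Margin for voltage drop: {margin:.2f}%")
    print(f"Phase voltage at rated LV: {line_to_neutral(TRANSFORMER_RATED_LV):.1f} V")
    print(f"Phase voltage at nominal:  {line_to_neutral(SYSTEM_NOMINAL_LV):.1f} V")
```

The margin works out to roughly 4.3%, and the 433V rating corresponds to about 250V phase-to-neutral, compared with about 240V for a 415V system.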

Why the Difference Matters in Engineering Design

Understanding the distinction between nominal and rated voltage is crucial in power system design and equipment selection. Engineers use nominal voltage when planning electrical networks, such as defining cable insulation levels, switchgear ratings, and transformer connections.

Manufacturers, however, rely on rated voltage to design equipment that can operate safely within that system. A mismatch between the nominal system voltage and the equipment's rated voltage can cause several problems:

- Overheating due to excessive voltage.

- Reduced equipment life from insulation breakdown.

- Reduced efficiency or performance when equipment runs away from its design voltage.

- Failure to meet safety codes or standards.

Hence, every electrical installation must coordinate the system voltage (nominal) with the equipment voltage (rated) to maintain reliability and efficiency.

International Standard Nominal Voltages

| Region | Common Nominal Voltages | Frequency (Hz) |
|---|---|---|
| North America | 120V / 240V / 480V | 60 |
| Europe | 230V / 400V | 50 |
| Asia (including Pakistan, India) | 230V / 400V | 50 |
| Japan | 100V / 200V | 50 / 60 |
| Australia | 230V / 400V | 50 |

These voltages are “nominal,” meaning actual system voltages can slightly deviate depending on generation and load factors.
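The regional table can also be treated as a small lookup. The hypothetical Python helper below lists which of a region's nominal voltages fall inside a device's rated voltage range, provided the device supports at least one of the region's frequencies. It is a deliberate simplification: it treats every listed voltage as if it were available at an outlet and ignores details such as North America's 240V being a split-phase supply.

```python
from __future__ import annotations

# Nominal voltages (V) and frequencies (Hz) copied from the regional table above.
NOMINAL_SYSTEMS = {
    "North America": ((120, 240, 480), (60,)),
    "Europe": ((230, 400), (50,)),
    "Asia (including Pakistan, India)": ((230, 400), (50,)),
    "Japan": ((100, 200), (50, 60)),
    "Australia": ((230, 400), (50,)),
}


def matching_nominals(region: str, rated_min: float, rated_max: float,
                      rated_hz: tuple[int, ...]) -> list[int]:
    """Nominal voltages in `region` that fall inside the device's rated range.

    Returns an empty list if none of the device's rated frequencies is used
    in that region.
    """
    voltages, freqs = NOMINAL_SYSTEMS[region]
    if not set(rated_hz) & set(freqs):
        return []
    return [v for v in voltages if rated_min <= v <= rated_max]


if __name__ == "__main__":
    for region in NOMINAL_SYSTEMS:
        narrow = matching_nominals(region, 220, 240, (50,))
        universal = matching_nominals(region, 100, 240, (50, 60))
        print(f"{region}: 220-240V/50Hz -> {narrow}, 100-240V/50-60Hz -> {universal}")
```

With this data, a 220–240V, 50Hz appliance matches 230V in Europe, Asia, and Australia but nothing in North America or Japan, whereas a 100–240V, 50/60Hz power supply matches at least one nominal voltage in every region listed.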

Example of Rated Voltage in Common Equipment

| Equipment Type | Rated Voltage (V) | Remarks |
|---|---|---|
| Domestic fan | 220V | Matches 230V nominal system |
| Industrial motor | 415V | Used with 400V nominal 3-phase system |
| Transformer (Distribution) | 11kV / 433V | Serves 11kV/415V network |
| Capacitor bank | 440V | Rated higher for safety margin |
| Circuit breaker | 415V or 690V | Rated to handle system nominal voltage safely |

This shows how manufacturers align equipment ratings with standard nominal system voltages.

Voltage Tolerance and Operating Range

In real-world applications, supply voltages fluctuate. For example, a 230V nominal system can vary from 207V to 253V (±10%), depending on utility conditions. Equipment intended for such a supply, commonly marked 220–240V, is designed to operate across that range without performance issues.

Standards like IEC 60038 define acceptable tolerances for voltage variations, ensuring all equipment works harmoniously within the electrical grid.

This design flexibility allows consistent operation even under load variations or temporary voltage drops.
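The coordination described in this section can be sketched as a range check. The Python example below builds the supply band for a 230V system at ±10% and tests whether a device's acceptable range covers it. The function names are hypothetical, and the device tolerances (±10% around a 220–240V rating, ±5% around a single 220V rating) are illustrative assumptions, not values taken from any product standard.

```python
from __future__ import annotations


def supply_band(nominal_v: float, tol_pct: float) -> tuple[float, float]:
    """Range a supply may take with a symmetric tolerance around its nominal value."""
    return nominal_v * (1 - tol_pct / 100), nominal_v * (1 + tol_pct / 100)


def equipment_band(rated_min: float, rated_max: float, tol_pct: float) -> tuple[float, float]:
    """Range a device accepts: its rated range widened by an ASSUMED tolerance."""
    return rated_min * (1 - tol_pct / 100), rated_max * (1 + tol_pct / 100)


def covers(device: tuple[float, float], supply: tuple[float, float]) -> bool:
    """True if the whole supply range lies inside the device's acceptable range."""
    return device[0] <= supply[0] and supply[1] <= device[1]


if __name__ == "__main__":
    supply = supply_band(230, 10)  # 230V nominal, +/-10%
    devices = {
        "220-240V rating, assumed +/-10%": equipment_band(220, 240, 10),
        "220V rating, assumed +/-5%": equipment_band(220, 220, 5),
    }
    print(f"Supply band: {supply[0]:.0f}V to {supply[1]:.0f}V")
    for name, rng in devices.items():
        print(f"{name}: {rng[0]:.0f}V to {rng[1]:.0f}V, covers supply: {covers(rng, supply)}")
```

Under these assumptions, the first device accepts 198V to 264V and covers the whole 207V to 253V band; the second accepts only 209V to 231V, so a supply near either end of the band would fall outside its rating.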

Nominal Voltage vs Rated Voltage – In Simple Terms

If we simplify:

- Nominal voltage = The system’s designated value (e.g., “This house has a 230V supply”).

- Rated voltage = The device’s safe operating value (e.g., “This bulb works best at 220V”).

Think of nominal voltage as a “city speed limit” sign, and rated voltage as your car’s safe speed capacity. You drive within the system’s limits to ensure safety and performance.

Conclusion

The difference between nominal and rated voltage is not just theoretical; it is practical and essential. Nominal voltage represents the system’s standard value used for identification and compatibility, while rated voltage defines the safe and efficient operating point and limits of electrical equipment.

When engineers design power systems or choose components, they must ensure rated voltages match the nominal voltages of the network. This ensures equipment longevity, safety, and system reliability.
